Last December I passed the GCP Data Engineer exam and got my certification as a late Christmas gift! As I did for the AWS Big Data specialty, I would like to share some feedback on my preparation process. Spoiler alert: I did it without any online course!


Motivation

Some of you asked me why I needed another certification. There are multiple reasons. First of all, my experience with GCP was (too) short and mostly oriented toward BigQuery for processing and storage, GKE for Apache Airflow, and GCS. Before starting that project, I was hoping to get practical experience with Dataflow, Bigtable and/or Pub/Sub, but unfortunately, I wasn't lucky this time (to put it shortly). Nonetheless, this feeling of "dissatisfaction" stayed with me, and at the beginning of 2020, I told myself that getting the certification would be the first step toward feeling better (and working with these services would be the next one ;)).

What else? I want better theoretical and practical insight into the data services of the 3 major cloud providers. I'm sure that the certification, or rather the preparation process, will partially help me achieve this goal. Of course, the rest will come from real projects.

Lastly, I wanted to measure how much effort a data engineer comfortable with another cloud (AWS) needs to pass it.

Preparation

As you can see, my primary goal was to learn and, as a side effect, get certified. I started the process in June, so 6 months before the exam date (the exam was initially planned for October and postponed twice). After some quick research, I found that the "Official Google Cloud Certified Professional Data Engineer Study Guide" would be the best place to start. I used it to recall some basic concepts and to discover the learning path, since it's clearly explained in every chapter. I worked on it every Saturday and Sunday morning for 2 months.

Thanks to the book, I got a better idea of the exam expectations and listed the services I needed to learn. After that, I started working through the documentation, one service at a time. Here, I focused mainly on the "Concepts" and "How-to guides" sections. I left the "Tutorials" section for the last weeks of the preparation, but I didn't have enough time to go through all of them. To be honest, I only worked through the ones covering concepts that were still unclear to me.

In addition to the documentation, I read the official GCP blog posts on data, networking, storage, and ML topics, and watched some Google Cloud Next videos on the same topics.

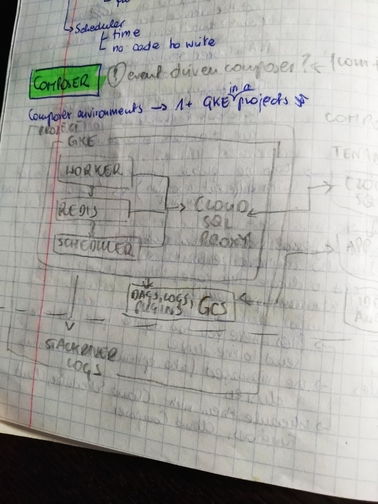

In every of these analysis steps, I took some notes by hand (I'm feeling old whenever I write this 👴). The handwriting helps me memorize things faster and also offers better flexibility for drawing. The drawn schemas and diagrams were very fast reminders of the important concepts during the revision, like the below example illustrating the components used in Cloud Composer:

And what about the online courses? I tried one on Coursera but didn't continue because I wanted to discover everything on my own and be sure I didn't miss anything, even the things not required for the exam. That said, it was a good learning experience overall, and if you prefer that path, it's probably a good one too!

Instead of online courses, I needed some practice tests to identify the empty spaces in my brain. I started with the tests provided with the "Official Google Cloud Certified Professional Data Engineer Study Guide" and supplemented them with Whizlabs' practice tests (I recommend both!). I later discovered that the emptiest spaces were mostly related to Machine Learning. Compared to the AWS Big Data specialty, I had the feeling that the GCP Data Engineer exam requires a bit more data science knowledge, not only about deployment but also about models and quality issues (if you wake me up at 2 AM, I will certainly be able to explain regularization to you). BTW, I also prepared a practice test for you based on my handwritten notes; you can try it right now on Udemy.

After identifying my ML gaps, I put more emphasis on these topics while going through the docs and videos, dedicating about 30% of every learning session to them. After the first 2 months, I kept the weekend-morning schedule and added sessions on weekday mornings whenever I had some time (remote work gave me back 2 hours, so I had some extra time to spend on the certification :)).

Tips

For the preparation part, identify your problematic domains as soon as possible and spend time improving them. The easiest way to do so is to take practice tests, since they point out what you're missing. But if you don't want to take them, or prefer to keep them for the end of the preparation cycle, you can also challenge yourself whenever you watch a new lesson or read a new documentation page. If something is unclear, it probably belongs to a much wider domain that you haven't mastered well enough.

For these insufficiently mastered domains, I created a Google Docs file to prioritize all concepts by weakness level. Of course, I tried to tackle the weakest ones first, but I didn't focus only on them. I regularly reorganized the backlog after learning something (i.e., reducing a weakness level) so as not to gain knowledge in one area while missing it in another.

For the exam itself, I used the same strategy as for the AWS Big Data specialty, namely solving questions in micro-batches (you know me, I love Apache Spark 🥰). To be more precise, I started with the first 10 questions, then jumped to the next 10, and continued like that until the end.

For individual questions, I also followed my previous strategy, i.e., I sorted them into "easy", "hard", and "I don't know" categories. I marked the questions from the last 2 categories as "To review". After the first pass, I returned to the marked questions and spent some more time on each of them. Fortunately, I got enough of them right, since I passed the exam in the end!

That's all for GCP. The next steps in my data engineering cloud journey will be a richer GCP project and the Azure DP-200 and DP-201 certifications, to discover the things I couldn't see during my previous Azure projects.

PS. Fun fact: a few weeks after scheduling the AWS Big Data specialty, I learned that it would be replaced by a new exam. And it's happening to me once again with Azure! DP-200 and DP-201 will be replaced by DP-203 🤦‍♂️
