Databricks Asset Bundles (DAB) greatly simplify managing Databricks jobs and resources. They are also flexible: besides the declarative YAML definitions, you can add some dynamic behavior with scripts.


When I first came across scripts, my past caught up with me and made me think of them as hooks. Hooks are common constructs in software engineering that let you attach extra behavior to an action. For example, in Git, if you want to allow only commits with correctly formatted files, you can define a linter as a pre-commit hook in your local repository. It's always better to discover these kinds of issues locally than to go back and forth with the CI/CD workflow.

So, where do DABs come in? Even though bundles cover more and more Databricks resources, as of this writing a few things are still not covered. You can create a Unity Catalog schema, but you can't manage its tables. I know there are other ways to manage them, but sometimes you may want to keep table management together with the workflows that use the tables, in a single monorepo project. Another example is directories on volumes. You can declare a new volume in a DAB, but you can't create any directories in it from the YAML. Again, you could create them directly from the workflow or in a resource setup notebook, but you might also want to keep all of this within the DAB scope.

Tables and directories are just two examples where the scripts resource can help. To see how, let's focus on the directory creation problem. Suppose we have a job triggered by a new file arriving in a volume, and the trigger watches a directory called new_input. The problem is that this directory doesn't exist yet, so the trigger will never work:

resources:
  jobs:
    new_files_handling_job:
      name: new_files_handling_job
      trigger:
        file_arrival:
          url: '/Volumes/wfc/files/new_input/'
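The directories a bundle's jobs depend on are already visible in its configuration. As a minimal sketch, assuming the databricks.yml content has already been parsed into a plain dictionary (for example with a YAML library, not shown here), a hypothetical helper named file_arrival_urls could collect every file arrival path, so that a setup script knows which directories to create:

```python
from typing import Any, Dict, List


def file_arrival_urls(bundle_config: Dict[str, Any]) -> List[str]:
    """Collect the file_arrival trigger URLs of all jobs in a parsed bundle config.

    Hypothetical helper: it only walks resources.jobs.<job>.trigger.file_arrival.url,
    the layout used by the job definition above.
    """
    urls = []
    jobs = (bundle_config.get("resources") or {}).get("jobs") or {}
    for job in jobs.values():
        trigger = (job or {}).get("trigger") or {}
        file_arrival = trigger.get("file_arrival") or {}
        url = file_arrival.get("url")
        if url:
            urls.append(url)
    return urls


config = {
    "resources": {
        "jobs": {
            "new_files_handling_job": {
                "name": "new_files_handling_job",
                "trigger": {"file_arrival": {"url": "/Volumes/wfc/files/new_input/"}},
            },
            # A job without any trigger is simply skipped.
            "daily_job": {"name": "daily_job"},
        }
    }
}
print(file_arrival_urls(config))  # ['/Volumes/wfc/files/new_input/']
```

A real bundle can also split jobs across included files and override them per target, which this sketch deliberately ignores.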

Scripts

In simple terms, scripts are resources that you can execute with the bundle run command. A big advantage is that they inherit the bundle's authentication, which greatly simplifies the script code. For example, to create a directory in our files volume, we simply have to create a new WorkspaceClient and call its files.create_directory method:

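from databricks.sdk import WorkspaceClient

# No explicit credentials: the script inherits the bundle's authentication
workspace_client = WorkspaceClient()
workspace_client.files.create_directory('/Volumes/wfc/files/new_input')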
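When the jobs need more than one directory, the same call can be looped over a list. The sketch below assumes the databricks-sdk package; the helper name ensure_directories and the VOLUME_DIRECTORIES list are hypothetical. The helper receives the client's files API as a parameter so it can be exercised without a workspace, and it relies on create_directory behaving like mkdir -p (creating missing parents and succeeding when the directory already exists), which is how the SDK documents it. That should make rerunning the script after every deployment harmless.

```python
from typing import Iterable, List, Protocol


class FilesApi(Protocol):
    """The only method of WorkspaceClient().files that this sketch uses."""

    def create_directory(self, directory_path: str) -> None: ...


# Hypothetical list of directories the bundle's jobs expect to exist.
VOLUME_DIRECTORIES = ["/Volumes/wfc/files/new_input"]


def ensure_directories(files: FilesApi, directories: Iterable[str]) -> List[str]:
    """Make sure every directory exists and return the processed paths in order."""
    ensured = []
    for directory in directories:
        # Documented as idempotent: an already existing directory is not an error.
        files.create_directory(directory)
        ensured.append(directory)
    return ensured


# Entry point of create_dirs.py (needs databricks-sdk, hence shown as a comment):
#   from databricks.sdk import WorkspaceClient
#   ensure_directories(WorkspaceClient().files, VOLUME_DIRECTORIES)
```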

Let's call this script create_dirs.py and keep it as part of our source code so that it can easily access other modules. To make it work with the DAB, you need to declare create_dirs.py in the scripts resource as follows:

# databricks.yml
scripts:
  create_dirs_on_volumes:
    content: poetry run src/scripts/create_dirs.py

Now you can run it by invoking databricks bundle run create_dirs_on_volumes -t ${env}. I know, you'll tell me this isn't how hooks really behave: hooks are declared once and run automatically, whereas DAB scripts require an explicit execution. That's true, but if a CI/CD pipeline drives your deployments, you can simulate hook-like behavior by following the bundle deployment with the script execution:

databricks bundle deploy -t ${env}

databricks bundle run create_dirs_on_volumes -t ${env}
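In a CI/CD job, the two commands above can be wrapped so that the script only runs after a successful deployment, which is what makes the pair behave like a post-deploy hook. A minimal Python sketch, assuming the databricks CLI is on the PATH of the CI runner; the function names are hypothetical, and the runner parameter exists only to make the sketch testable:

```python
import subprocess
from typing import Callable, List, Sequence


def deploy_commands(env: str, script_keys: Sequence[str]) -> List[List[str]]:
    """Build the deploy command followed by one bundle run per script key."""
    commands = [["databricks", "bundle", "deploy", "-t", env]]
    for key in script_keys:
        commands.append(["databricks", "bundle", "run", key, "-t", env])
    return commands


def run_in_order(commands: Sequence[Sequence[str]],
                 runner: Callable[..., object] = subprocess.run) -> None:
    """Run the commands one by one and stop at the first failure."""
    for command in commands:
        # With check=True, subprocess.run raises CalledProcessError on a non-zero
        # exit code, so a failed deployment never triggers the scripts.
        runner(list(command), check=True)


# Usage in a CI step:
#   run_in_order(deploy_commands("dev", ["create_dirs_on_volumes"]))
```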

Troubleshooting

Scripts are easy to set up, but you might run into one or two problems with them. The first one is this error:

[Errno 8] Exec format error: 'src/scripts/create_dirs.py'

One cause of this exec format error is a missing shebang line at the beginning of the file. Adding one for Python (e.g. #!/usr/bin/env python3) should solve the problem.
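Before pushing, it can help to check the script file locally. The sketch below is a hypothetical pre-flight check, assuming a POSIX system: it flags a missing shebang, the cause described above, and a missing executable bit, which typically surfaces as a permission error rather than an exec format error. Invoking the interpreter explicitly, as in poetry run python src/scripts/create_dirs.py, would avoid both requirements.

```python
import os
import stat
from typing import List

# Owner, group and others execute permission bits.
EXECUTE_BITS = stat.S_IXUSR | stat.S_IXGRP | stat.S_IXOTH


def script_problems(first_line: bytes, mode: int) -> List[str]:
    """Return the reasons a file may fail to start when executed directly."""
    problems = []
    if not first_line.startswith(b"#!"):
        problems.append("missing shebang, e.g. #!/usr/bin/env python3")
    if not mode & EXECUTE_BITS:
        problems.append("no executable bit, e.g. chmod +x the script")
    return problems


def check_script(path: str) -> List[str]:
    """Read the first line and the permission bits of the script at path."""
    with open(path, "rb") as script:
        first_line = script.readline()
    return script_problems(first_line, os.stat(path).st_mode)


# Usage: print(check_script("src/scripts/create_dirs.py"))
```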

Another problem is variable substitution. Sometimes you might need to reference other DAB variables in the script, for example by passing them as input arguments:

poetry run src/scripts/create_dirs.py ${workspace.current_user.userName}

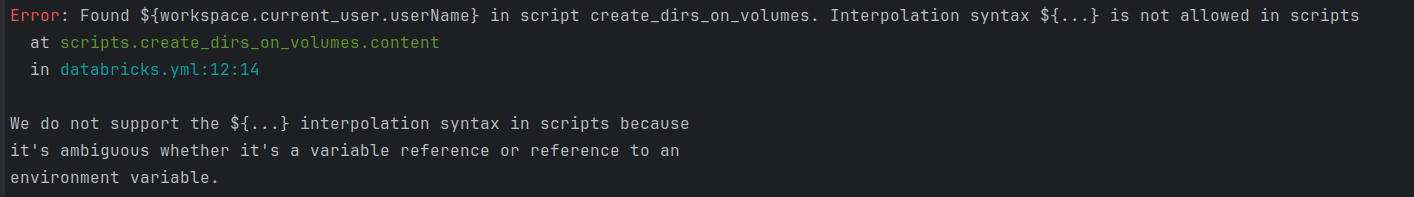

When you try to run our script declared that way, DABs will gently inform you it's not the supported way:

Your next attempt might then be:

databricks bundle run create_dirs_on_volumes -t dev ${workspace.current_user.userName}

# or

databricks bundle run create_dirs_on_volumes ${workspace.current_user.userName} -t dev

Unfortunately, both attempts end with this error:

bash: ${workspace.current_user.userName}: bad substitution

As of today, I haven't found a solution to this variable substitution issue. It wasn't a serious problem for my scripts, but I wanted to mention it here in case it is for yours.
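The bad substitution message comes from bash itself: ${...} is shell parameter expansion, and a name containing dots is not a valid shell variable, so the shell rejects the argument before the databricks CLI even sees it. One possible workaround, not part of the setup described here, is to let the script look the value up on its own, since scripts inherit the bundle's authentication. A minimal sketch, assuming databricks-sdk, where WorkspaceClient().current_user.me() returns the identity the client is authenticated as; the helper name resolve_user_name is hypothetical:

```python
from typing import Any, Sequence


def resolve_user_name(workspace_client: Any, argv: Sequence[str]) -> str:
    """Return the user name passed as the first argument, or ask the workspace for it."""
    if argv:
        return argv[0]
    # current_user.me() describes the authenticated identity; its user_name
    # should correspond to ${workspace.current_user.userName}.
    return workspace_client.current_user.me().user_name


# In create_dirs.py (needs databricks-sdk, hence shown as a comment):
#   import sys
#   from databricks.sdk import WorkspaceClient
#   user_name = resolve_user_name(WorkspaceClient(), sys.argv[1:])
```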

Next steps?

At the moment, the Databricks community is discussing the possibility of adding native pre- and post-deploy hooks to DABs. If you want to follow the discussion, bookmark this GitHub issue about Support for post deploy hooks.

If you don't want to follow the whole discussion but would like to know how to implement pre-deploy hooks without scripts, you'll also find the answer in that GitHub issue ;) And who knows, maybe in a few months I'll be rewriting my scripts as hooks and sharing other lessons learned with you?

Consulting

With nearly 17 years of experience, including 9 as a data engineer, I offer expert consulting to design and optimize scalable data solutions.

As an O'Reilly author, Data+AI Summit speaker, and blogger, I bring cutting-edge insights to modernize infrastructure, build robust pipelines, and drive data-driven decision-making. Let's transform your data challenges into opportunities: reach out to elevate your data engineering game today!

👉 contact@waitingforcode.com

🔗 past projects