While I was writing about agnostic data quality alerts with ydata-profiling a few weeks ago, I had an idea for another blog post that can be summarized as "what do alerts do in data engineering projects?". Since the answer is "it depends", let me share my thoughts on that.


Alerts vs. data guards

Before I give you some details on the answer, I owe you a few words of introduction. In the context of this blog post, the first thing to know is the difference between alerts and guards. The easiest way to grasp it is to locate them in a data processing timeline. Guards occur before performing any work on the dataset, i.e. they can prevent the work from being done if some conditions are not met. Alerts, on the other hand, are more of a post-processing component that triggers after the fact, for example after a job failure. Consequently, they won't prevent any damage but they will keep you up to date with the work in progress by notifying you about any unexpected behavior.

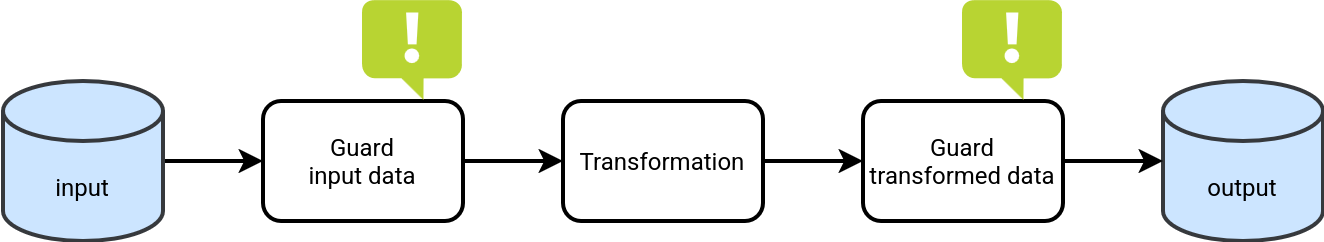

The world would be too nice if we could stop at this definition, though. That's not the case because an alert is a natural consequence of a guard evaluation. Why? Let's imagine the pipeline like in the next diagram:

As you can see, whenever a guard evaluates the dataset as invalid, it emits an alert to notify the pipeline owners about the issue. Without this coupling, you, as a pipeline owner, wouldn't be aware of broken things until the consumers of your output notified you. You will certainly agree with me that it's better to be proactive and build trust in your data than to be passive and wait for bad things to come to you.

Long story short, alerts and guards are different but the guards feed the alerts. More exactly, the guards feed the data quality type of alerts.
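To make the coupling between guards and alerts more concrete, here is a minimal Python sketch. Everything in it is an assumption made for illustration: the `Alert` and `AlertChannel` classes, the two guard functions, and `run_pipeline` are hypothetical names, and the in-memory channel only stands in for a real notification system such as email or a chat webhook.

```python
from dataclasses import dataclass, field


@dataclass
class Alert:
    source: str   # name of the guard that raised the alert
    message: str  # human-readable description of the issue


@dataclass
class AlertChannel:
    """Hypothetical in-memory stand-in for email, chat, or paging tools."""
    sent: list = field(default_factory=list)

    def notify(self, alert: Alert) -> None:
        self.sent.append(alert)


def not_empty_guard(rows):
    """Return an error message for an invalid dataset, None otherwise."""
    return None if rows else "dataset is empty"


def no_null_ids_guard(rows):
    missing = sum(1 for row in rows if row.get("id") is None)
    return f"{missing} row(s) without id" if missing else None


def run_pipeline(rows, guards, channel, process):
    """Evaluate the guards first; process the rows only if all of them pass."""
    for guard in guards:
        error = guard(rows)
        if error is not None:
            # The guard blocks the work and the alert informs the owners.
            channel.notify(Alert(source=guard.__name__, message=error))
            return False
    process(rows)
    return True


channel = AlertChannel()
processed = []
was_processed = run_pipeline(
    [{"id": 1}, {"id": None}],
    [not_empty_guard, no_null_ids_guard],
    channel,
    processed.extend,
)
```

In this run the second guard rejects the dataset, so nothing is processed and the channel receives a single alert. The guard protects the consumers, while the alert is what tells you, the pipeline owner, that something went wrong.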

Use cases

Alerts are a consequence of the guards but their application scope is much wider than the data quality-related issues. You can use the alerts to be notified about:

- Any job or task issues. Thankfully, many data engineering platforms provide native alerting capabilities at this level. For example, if you configure your jobs on Databricks with Databricks Asset Bundles, you can enable email alerts on failure with the email_notifications.on_failure setting.

- SLA misses. If you expect your batch job to complete within a specified time, you can configure that expected completion time for the job. The job will then trigger an alert whenever its duration exceeds the configured threshold. If your data orchestrator doesn't support this, you can configure the maximum execution time of the job and rely on the job failure alerts to be notified about the execution timeout that signals the SLA miss.

- Hardware issues. This type of alert is particularly useful for long-running jobs like the streaming ones, although its application scope is not limited to them. As they run continuously, the risk of filling up the disk space is pretty high. If you are unlucky, you may even fill the whole disk with... logs (the more experienced among you may remember problems like SPARK-22783).

- Maintenance operations. These alerts are often not related to your data engineering space but rather to the cloud provider. Whenever there is a maintenance operation, a breaking change, or an end of life for a version of the cloud resources you use, the cloud provider sends you an alert. That way you can take preemptive action to ensure nothing breaks after that change.

- Unavailability of external dependencies. If your pipelines interact a lot with external dependencies such as APIs, you may have implemented a system of probes that verify the availability of the dependencies on a regular schedule. Naturally, if one of the dependencies is not available, you can be alerted before you see your pipelines failing. Having alerts on the external dependencies gives you more options to handle the issue, for example by redirecting the requests to other APIs.

- Data governance issues. A great example here is schema drift, i.e. the situation when the schema of the dataset you're supposed to process changes over time. The change doesn't need to be a sign of a failure in your processing logic; it can simply be a sign of a missed data opportunity. For example, it can be a new column added to one of the tables that might enable new business insights.

- Security issues. This is another interesting part not necessarily related to the data processing itself (to which you, as a data engineer, must devote some special love!). Any unsuccessful login attempts or unauthorized access requests are suspicious activities. Naturally, it's better to be aware of them. To implement this part you'll need to collect the audit logs and analyze them to detect any risky actions.

- Data quality issues. That's the use case from the starting point of this blog post. The data quality alerts notify you about datasets that don't meet the expected quality, for example because of unbalanced or missing values, or a too small overall data volume.
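Two of the use cases from the list, schema drift and SLA misses, can be sketched in a few lines of Python. This is only an illustration under simplifying assumptions: the schemas are plain dictionaries mapping column names to type names, and `detect_schema_drift` and `is_sla_missed` are hypothetical helpers, not functions from any library or orchestrator.

```python
from datetime import datetime, timedelta


def detect_schema_drift(expected: dict, actual: dict) -> dict:
    """Compare two {column: type} mappings and list the differences."""
    shared = set(expected) & set(actual)
    return {
        "added": sorted(set(actual) - set(expected)),
        "removed": sorted(set(expected) - set(actual)),
        "retyped": sorted(col for col in shared if expected[col] != actual[col]),
    }


def is_sla_missed(started_at: datetime, now: datetime, max_duration: timedelta) -> bool:
    """A job misses its SLA when it has run longer than the allowed duration."""
    return now - started_at > max_duration


# Hypothetical schemas: a new column appeared and "id" changed its type.
drift = detect_schema_drift(
    {"id": "int", "name": "string"},
    {"id": "long", "name": "string", "country": "string"},
)

# Hypothetical job that started at 02:00 with a 1-hour SLA, checked at 03:30.
sla_missed = is_sla_missed(
    started_at=datetime(2024, 1, 1, 2, 0),
    now=datetime(2024, 1, 1, 3, 30),
    max_duration=timedelta(hours=1),
)
```

Whether a detected drift should block the pipeline or only notify you is a design choice. An added column is often just information about a missed data opportunity, while a removed or retyped column is more likely to break the processing logic.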

I was not technical this time but hopefully the examples from the second section convinced you that alerts are an intrinsic part of a mature data engineering project.
